REFERENCES

Bourlard, H. and Kamp, Y. (1988). Auto-association by multilayer perceptrons and singular value decomposition. Biological Cybernetics, 59, 291–294.

Bojarski, M., Del Testa, D., Dworakowski, D., Firner, B., Flepp, B., Goyal, P., Zhang, X. (2016). End to end learning for self-driving cars. arXiv preprint arXiv:1604.07316.

Doersch, C. (2016). Tutorial on variational autoencoders. arXiv preprint arXiv:1606.05908.

DiCarlo, J. J. (2013). Mechanisms underlying visual object recognition: Humans vs. neurons vs. machines. NIPS Tutorial.

Everitt, B. S. (1984). An Introduction to Latent Variable Models. Chapman & Hall. ISBN 978-9401089548.

Fukushima, K. (1980). Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 36, 193–202.

Gibson, J. (1979). The Ecological Approach to Visual Perception. Boston: Houghton Mifflin.

Hinton, G. E. and Zemel, R. S. (1994). Autoencoders, minimum description length, and Helmholtz free energy. In NIPS’1993.

Hinton, G. E. and Salakhutdinov, R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504–507.

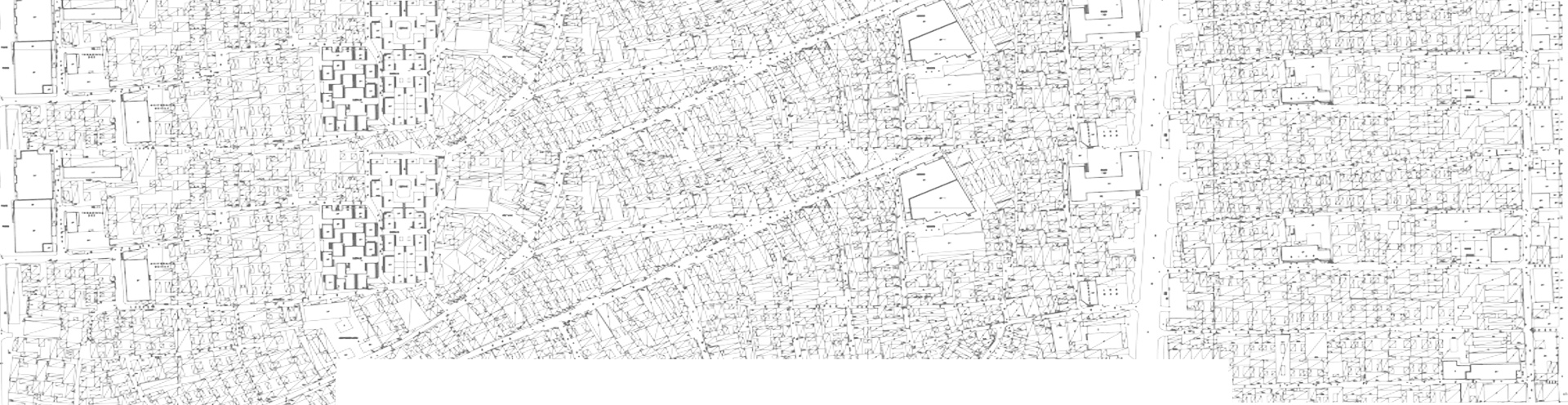

Hillier, B. (2007). Space is the Machine: A Configurational Theory of Architecture. Space Syntax.

Hubel, D. H. and Wiesel, T. N. (1959). Receptive fields of single neurons in the cat’s striate cortex. Journal of Physiology, 148, 574–591.

Kingma, D. P. (2013). Fast gradient-based inference with continuous latent variable models in auxiliary form. Technical report, arXiv:1306.0733.

Kingma, D. P. and Welling, M. (2014). Auto-encoding variational bayes. In Proceedings of the International Conference on Learning Representations (ICLR).

LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. (1998b). Gradient-based learning applied to document recognition. Proc. IEEE.

LeCun, Y. (1989). Generalization and network design strategies. Technical Report CRG-TR-89-4, University of Toronto.

Rezende, D. J., Mohamed, S., and Wierstra, D. (2014). Stochastic backpropagation and approximate inference in deep generative models. In ICML’2014. Preprint: arXiv:1401.4082.

Sparkes, B. (1996). The Red and the Black: Studies in Greek Pottery. Routledge.

Tandy, D. W. (1997). Works and Days: A Translation and Commentary for the Social Sciences. University of California Press.

Varoudis, T. (2012). depthmapX - Multi-Platform Spatial Network Analyses Software. OpenSource.

Yu, D., Yao, K., Su, H., Li, G., and Seide, F. (2013). KL-divergence regularized deep neural network adaptation for improved large vocabulary speech recognition. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, pp. 7893–7897.

Zeiler, M. D., Krishnan, D., Taylor, G. W., and Fergus, R. (2010). Deconvolutional networks, pp. 2528–2535.
